Using Prompt Methodology for AI Visibility Optimization

The Skinny: Traditional keyword research is evolving into a Prompt Methodology that targets full-sentence, natural-language queries used in AI search. Success depends on optimizing for user personas and “intent families” rather than specific platforms, ensuring your brand is cited as a trusted source. By hosting authoritative, first-party content on your own domain, you move from merely tracking visibility to actively influencing AI-generated answers.

SEO teams have always lived and breathed their keyword research.

We built methodologies for choosing them: user research, intent mapping, volume thresholds, difficulty scores, SERP features, business value. Then we pushed those keywords into rank trackers and dashboards and content strategies.

AI search works the same way at a higher resolution:

Prompts are the new measurement unit and they need a methodology just as rigorous as keyword research.

At the same time, measurement alone isn’t enough. Just as keyword research only became valuable once teams could act on it through content, AI search insights need an execution layer.

This is where many brands are starting to evolve their approach. Tinuiti partners with FERMÀT’s AI Search Commerce Engine to help teams move beyond tracking prompt visibility and toward operationalizing it by using first-party content to influence how and where brands appear in AI-generated answers.

The goal isn’t just to observe AI search behavior, but to turn prompt insights into something teams can actually use.

A key operational consideration in AI search is what happens after content is created — specifically how that content is deployed, hosted, and surfaced as first-party signals to AI models.

“With FERMÀT, the content we generate is first-party by default and deployed directly on the brand’s main domain. There’s no additional hosting, no separate CMS, and no handoff to engineering just to get content live. That matters because AI models place more trust in content that’s clearly authoritative, first-party, and connected to real product data. The trust is much harder to establish with agents and consumers when content lives off-domain or behind extra deployment layers.” – Josh Feiber, Engineering Lead, FERMÀT AI Search

Lots of tools will let you track how often your brand appears and is cited for specific prompts in ChatGPT, Google AI Mode, Perplexity, and more.

But none of that matters if your prompt list is a random selection or the output of a quick ChatGPT request. No matter how many times you ask, ChatGPT won’t know what people are actually searching for.

This article walks through a practical, repeatable prompt selection methodology that you can plug into your existing SEO workflows and adapt to your business. However you track visibility, it gives you a foundation to expand and extend your strategies.

Regardless of how you feel about AI visibility tracking or whether you view AEO/GEO/AI SEO as just an extension of SEO, I believe that this exercise forces you to think bigger about how users actually search now.

Stepping back from traditional keyword volumes and mapping full conversational journeys opens up richer opportunities for both on-site content and off-site influence. It sharpens your understanding of user intent in a world where questions are phrased naturally, decisions happen faster, and the “search results page” is no longer a page at all.

In classic SEO, you don’t start with the keywords you hope matter; you start with the business outcomes you need to support. That usually means asking:

Prompt selection follows the same logic. Before you generate a single prompt, define:

Having these clearly mapped gives you a filter for every prompt you consider. It lets you ask, “Will tracking this prompt help us understand the journeys and decisions that actually matter for the business?”

When prompt selection is grounded in outcomes, it also becomes easier to connect insight to execution. High-value prompts naturally map to priority categories, competitive gaps, and moments where content can influence decisions.

In practice, this approach allows teams to treat prompt research less like a static analysis and more like an ongoing workflow. Prompts inform what content gets created, refreshed, or expanded, and performance is evaluated based on whether that content is actually referenced in AI responses.

One of the biggest mistakes teams make is trying to build an AI search strategy around specific platforms. With ChatGPT, Google AI, Perplexity, Gemini, and a dozen others evolving weekly, chasing platforms becomes endless and ineffective.

A better approach, and the one that actually scales while benefiting your traditional SEO strategies, is to optimize for personas.

Your users will adopt different AI assistants for different reasons: subscription access, built-in AI features, device preferences, UX comfort, or simple habit. Some will stick with Google. Some will ask everything in ChatGPT. Others will interact with AI only through social platforms or their operating system.

When you build your prompt strategy around personas:

This mindset is the foundation of a strong prompt methodology: The person matters more than the platform.

Use persona-driven research to pinpoint high-priority topics and build a prompt set strategy that better aligns with real user behavior.

This type of mapping highlights patterns across personas — and those shared needs become your highest-value prompts. It also reveals persona-specific questions that help you build deeper topical authority.
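One way to make this mapping concrete is to tally how many personas raise each theme. The sketch below is illustrative only: the persona names, the themes, and the `min_personas` cutoff are hypothetical placeholders, not data from this article.

```python
from collections import Counter

# Hypothetical persona-to-theme mapping; replace with your own research.
PERSONA_THEMES = {
    "busy_parent": {"ingredient safety", "price", "daily dosage"},
    "fitness_enthusiast": {"ingredient safety", "absorption", "daily dosage"},
    "first_time_buyer": {"price", "ingredient safety", "brand trust"},
}


def theme_overlap(persona_themes):
    """Count how many personas raise each theme."""
    counts = Counter()
    for themes in persona_themes.values():
        counts.update(themes)
    return counts


def split_themes(persona_themes, min_personas=2):
    """Separate themes shared by several personas from persona-specific ones."""
    counts = theme_overlap(persona_themes)
    shared = sorted(t for t, n in counts.items() if n >= min_personas)
    specific = sorted(t for t, n in counts.items() if n < min_personas)
    return shared, specific


shared, specific = split_themes(PERSONA_THEMES)
print("Shared themes:", shared)
print("Persona-specific themes:", specific)
```

Shared themes are candidates for your highest-value prompts, while persona-specific themes are where deeper topical authority can be built.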

Once you’ve mapped your personas and the themes they’re likely to ask about, the next step is turning those journeys into a structured set of topics. This is where your keyword research becomes useful not as the starting point, but as a way to confirm the demand behind the topics you’ve already identified.

Your existing keyword data helps you understand:

Instead of rewriting keywords one-by-one into prompts, focus on topic clusters that consistently show up in both your persona mapping and your keyword insights. Those clusters become the foundation of your prompt universe.

At this stage, your job is simply to validate: Do these topics align with real user behavior and real demand?
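As a rough sketch, that validation step can be expressed as a comparison between persona topics and keyword clusters. Everything here is an assumption for illustration: the topic names, the volume figures, and the `min_volume` threshold are made up, and the keyword data would come from whatever tool you already use.

```python
# Hypothetical inputs: topics from persona mapping, and monthly search
# volume per keyword cluster exported from your existing keyword tool.
PERSONA_TOPICS = {"ingredient safety", "daily dosage", "brand trust", "absorption"}
KEYWORD_CLUSTER_VOLUME = {
    "ingredient safety": 5400,
    "daily dosage": 2900,
    "absorption": 40,
    "gummy vs capsule": 1900,
}


def validate_topics(persona_topics, cluster_volume, min_volume=100):
    """Sort topics into validated, needs-review, and keyword-only groups."""
    validated = sorted(
        t for t in persona_topics if cluster_volume.get(t, 0) >= min_volume
    )
    needs_review = sorted(
        t for t in persona_topics if cluster_volume.get(t, 0) < min_volume
    )
    # Clusters with demand that no persona surfaced; worth a second look.
    keyword_only = sorted(set(cluster_volume) - set(persona_topics))
    return {
        "validated": validated,
        "needs_review": needs_review,
        "keyword_only": keyword_only,
    }


report = validate_topics(PERSONA_TOPICS, KEYWORD_CLUSTER_VOLUME)
for group, topics in report.items():
    print(f"{group}: {topics}")
```

Topics in `needs_review` are not discarded automatically. A persona-sourced topic with little keyword volume may still matter in conversational search, so it is flagged for a human decision rather than dropped.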

There are research tools that can help uncover additional prompt angles and intent variations based on how LLMs contextualize your category, but the core inputs should always trace back to your personas.

This approach keeps your prompt universe anchored to meaningful topics, grounded in validated demand, and directly aligned with the questions your audience actually explores in conversational search.

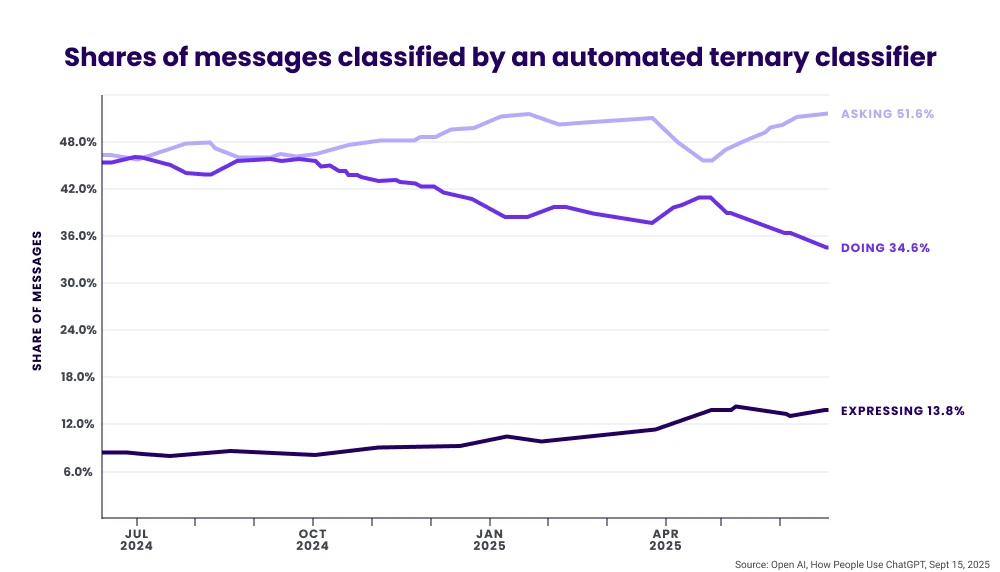

One of the biggest challenges in AI search today is that there is no true equivalent to keyword volume like we have in traditional SEO. There’s no public AI search volume, no query logs, and no unified view of how many times a prompt has been asked across platforms.

Most tools offering prompt volume metrics are doing the best they can with what’s available: they use large clickstream datasets to model conversational demand and project trends. That data can be directionally useful, but it’s important to understand its limitations.

Modeled prompt volumes often don’t account for:

Because of this, I think of prompt volume tools as helpful signals, not absolute truth.

They’re best used to:

Used thoughtfully, these tools help you shape a more complete view of conversational demand, just without the illusion of precision we’re used to in keyword research.

The goal is to lean in and understand how users talk and what kinds of prompts your personas are likely to ask as AI assistants become a default part of how people search.

Once you’ve validated your topics and understood the conversational demand behind them, the next step is organizing your prompts by intent. Just like keywords, prompts fall into recognizable behavioral patterns, but AI assistants introduce entirely new intent types that don’t exist in traditional search.

Your prompt methodology needs to account for all of them.

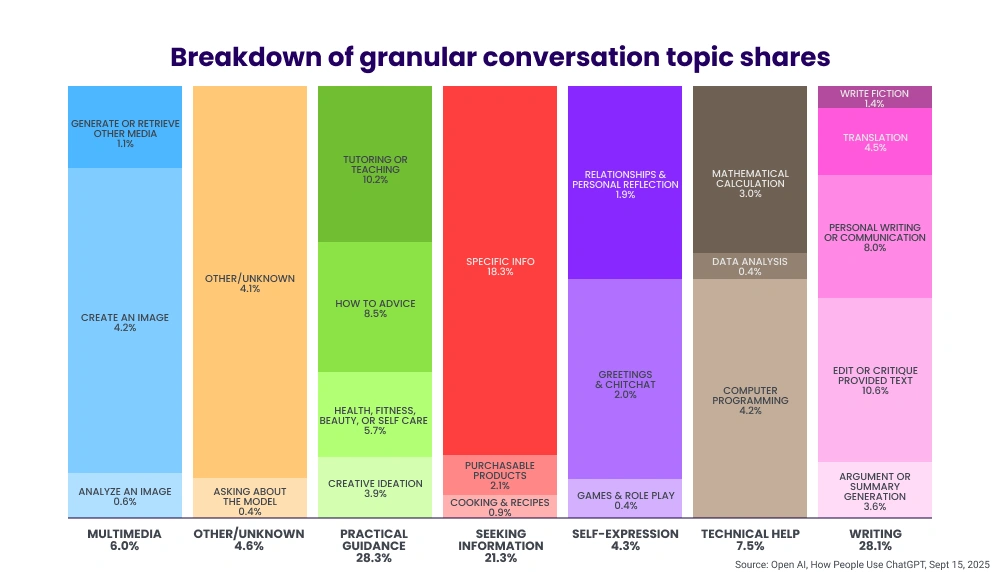

Traditional search is dominated by informational and navigational queries. ChatGPT, on the other hand, introduces two major new intent types:

These intent types dramatically change how users seek information and make decisions. To build a comprehensive prompt universe, you need a taxonomy that reflects both existing search intent and new AI-native intent.

| Category | Intent Type | Example Search/Prompt |
| --- | --- | --- |
| Existing, Traditional Search Intent Types | Informational | “What are multivitamins?” |
| | Commercial | “Best multivitamin for women over 30?” |
| | Transactional | “Where can I buy [Brand] near me?” |
| | Navigational | “Open my account dashboard for [Brand].” |
| | Comparative/Risk Assessment | “Is [Brand] legitimate?” |
| New Intent Types, Unique to AI Search | Generative | “Create a weekly meal plan for low carb.” |
| | Planning & Strategy | “Build a study plan to prepare for the LSAT.” |
| | Creative Production | “Write a comparison guide between X and Y.” |
| | Exploratory/Open-Ended | “Help me decide if I need a CRM.” |

What makes these intent families especially important is that many of them don’t map cleanly to traditional SEO measurement. Generative, exploratory, and planning prompts often influence decisions without producing a click, a SERP, or a clear conversion signal.

That’s why more teams are starting to evaluate AI search performance through citation and reference behavior across models.

This lens makes intent-based prompt grouping more than a taxonomy exercise. It becomes a way to understand where a brand is shaping outcomes inside AI-driven decision journeys, even when those journeys never touch a traditional search result.
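To illustrate how intent grouping and citation behavior can fit together, here is a minimal sketch that tags tracked prompts with an intent type and computes how often the brand is cited per type. The `PromptResult` structure, its `brand_cited` field, and the sample observations are assumptions made for this example; real tracking tools export their own formats.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Intent(Enum):
    # Traditional search intent types
    INFORMATIONAL = "informational"
    COMMERCIAL = "commercial"
    TRANSACTIONAL = "transactional"
    NAVIGATIONAL = "navigational"
    COMPARATIVE = "comparative/risk assessment"
    # Intent types unique to AI search
    GENERATIVE = "generative"
    PLANNING = "planning & strategy"
    CREATIVE = "creative production"
    EXPLORATORY = "exploratory/open-ended"


@dataclass
class PromptResult:
    prompt: str
    intent: Intent
    brand_cited: bool  # hypothetical field: did the AI answer cite the brand?


def citation_rate_by_intent(results):
    """Return the share of tracked prompts citing the brand, per intent type."""
    cited = defaultdict(int)
    total = defaultdict(int)
    for r in results:
        total[r.intent] += 1
        cited[r.intent] += int(r.brand_cited)
    return {intent: cited[intent] / total[intent] for intent in total}


# Made-up observations for illustration only.
results = [
    PromptResult("What are multivitamins?", Intent.INFORMATIONAL, True),
    PromptResult("Best multivitamin for women over 30?", Intent.COMMERCIAL, False),
    PromptResult("Best multivitamin for vegans?", Intent.COMMERCIAL, True),
    PromptResult("Create a weekly meal plan for low carb.", Intent.GENERATIVE, False),
]

for intent, rate in citation_rate_by_intent(results).items():
    print(f"{intent.value}: {rate:.0%}")
```

Intent types with no tracked prompts simply do not appear in the output, which is itself a useful signal that the prompt universe has a coverage gap.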

This classification helps you:

Once you know your categories, have validated them for demand, and identified the intent types you need to represent, it’s time to generate prompts.

This part should feel familiar as it mirrors the expansion stage of keyword research, but you’re working with natural language instead of queries.

Use multiple inputs so your prompt list reflects real user phrasing:

Not every prompt in your universe plays the same role year-round. Some anchor your long-term strategy; others help you stay relevant as user behavior shifts.

These are the stable, evergreen questions tied directly to your products, category, and primary personas. They stay relevant year-round and define the foundational search journeys you always want represented.

These surge during specific retail moments, cultural cycles, or seasonal needs and should rotate in and out of your universe as behavior shifts.

Core prompts give you stability and long-term insight; cyclical prompts help you adapt to shifting interest and real-time behavior. A healthy prompt strategy blends both — steady coverage of what always matters, plus flexible coverage of what matters right now. Here’s how to maintain them:

This balance keeps your prompt universe strategic, relevant, and easy to manage.
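A simple way to picture the core and cyclical split is a prompt list where each cyclical prompt carries an active window. The sketch below assumes a hypothetical `TrackedPrompt` structure with month-based windows; the prompts and windows are invented for illustration, and a real setup might key off retail calendars instead.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Tuple


@dataclass
class TrackedPrompt:
    text: str
    # None means a core (evergreen) prompt; otherwise an inclusive
    # (start_month, end_month) window for a cyclical prompt.
    active_months: Optional[Tuple[int, int]] = None

    def is_active(self, today: date) -> bool:
        if self.active_months is None:
            return True
        start, end = self.active_months
        if start <= end:
            return start <= today.month <= end
        # Window wraps around the new year, e.g. (12, 1) for Dec-Jan.
        return today.month >= start or today.month <= end


# Hypothetical prompts for illustration.
PROMPTS = [
    TrackedPrompt("Best multivitamin for women over 30?"),
    TrackedPrompt("Best vitamin gift sets for the holidays?", active_months=(11, 12)),
    TrackedPrompt("What supplements support a New Year fitness plan?", active_months=(12, 1)),
]


def active_prompts(prompts, today):
    """Return the prompt texts that should be tracked on the given date."""
    return [p.text for p in prompts if p.is_active(today)]


print(active_prompts(PROMPTS, date.today()))
```

Core prompts are always returned, while cyclical prompts rotate in and out as the date moves through their windows.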

There’s no magic number, but the goal is coverage, not volume. The win comes from representing how users actually search across your categories, not from building an endless list of variations. Chasing every variation will exhaust your research (and it gets very expensive).

Over time, you can:

The key is: start structured, scale intentionally.

Just like keyword research, a good prompt research strategy isn’t static.

Conversational habits evolve, new assistants rise, personas shift, and categories expand. Your prompt set should evolve with them.

Ongoing Prompt Refinement Tasks

A lightweight refresh cadence keeps your prompt universe aligned to real user behavior without reinventing the wheel every month.

As AI assistants reshape how people search, compare, and make decisions, the brands that win will be the ones who understand their audience and their brand deeply enough to show up in the questions, tasks, and conversations that actually drive intent.

A structured prompt methodology gives you that foundation. It turns “AI search” from a buzzword into a measurable, strategic part of your marketing engine—one that flexes across channels, personas, and platforms without losing its center of gravity.

If you want to dig deeper into how AI search is evolving, what’s coming in 2026, and how to build a durable strategy around it, our AI in Search Guide breaks it all down with predictions, frameworks, and examples you can use right now!